常見的7種K臂賭博機策略集合(下)

代碼部分是實戰demo。個人原創,禁止轉載。Doraemon:常見的7種K臂賭博機策略集合(上)上篇我們介紹了7種K臂賭博機策略的推導公式,今天我

代碼部分是實戰demo。個人原創,禁止轉載。

Doraemon:常見的7種K臂賭博機策略集合(上)上篇我們介紹了7種K臂賭博機策略的推導公式,今天我們看下如何用python實現它們。實際代碼中包含了10種策略。

策略類構建

第一塊代碼構建了一個類,采用pandas模擬的數值更新。該類包含策略及更新過程的模擬。

這里先說明一下,因為該代碼是將賭博機策略應用在了電商場景下,所以變量是以該場景下進行命名的。后面會有結果變量的解讀。

import randomnimport numpy as npnfrom scipy.stats import normnimport pandas as pdnimport mathnfrom tqdm import trangenn'''n用單步更新算法模擬強化學習在某一固定曝光位上推送優質商品的過程(該場景先驗分布為beta分布)n'''nnclass RL:n def __init__(self):n self.product_num = 10 # 品的數量n self.product_id = [i for i in range(1,self.product_num+1)] # 品的ID列表n self.product_ctr = [0.1,0.15,0.2,0.25,0.3,0.35,0.4,0.45,0.5,0.55] # 真實收益nn random.shuffle(self.product_id) # 隨機打亂品ID的順序n random.shuffle(self.product_ctr) # 隨機打亂品收益的順序n self.product_exp = [0 for i in range(self.product_num)] # 初始化品的曝光次數n self.product_click = [0 for i in range(self.product_num)] # 初始化品的點擊次數n self.product_weight = [1000 for i in range(self.product_num)] # 初始化品的權重n self.product_remark = [0 for i in range(self.product_num)] # 初始化品的備注項(貪心算法使用)n self.product_ha = [0 for i in range(self.product_num)] # 初始化偏好函數(梯度提升算法使用)n self.product_hap = [0 for i in range(self.product_num)] # 初始化偏好權重(梯度提升算法使用)n self.product_info = pd.DataFrame({'product_id':self.product_id,n 'product_ctr':self.product_ctr,n 'exp':self.product_exp,n 'click':self.product_click,n 'weight':self.product_weight,n 'remark':self.product_remark,n 'ha':self.product_ha,n 'hap':self.product_hap}) # 要更新的商品信息表nn self.times = 2000 # 實驗次數nnn def power_law(self,num,a,b):n '''n 收益往往遵循冪律分布n '''nn x = np.arange(1*10, (num+1)*10, 10) # 初始化數據點n # noise = norm.rvs(0, size=num, scale=0.1) # 正態噪聲n noise = [random.normalvariate(0, 0.1) for i in range(num)]n y = []n for i in range(len(x)):n y.append(10.8*pow(x[i],-0.3)+noise[i])nn # [a,b]歸一化n k = (b-a)/(max(y)-min(y))n result = [float(a+k*(z-min(y))) for z in y]nn return resultnnnn def product_is_click(self,pro_id):n '''n 模擬商品是否點擊n '''n ctr = self.product_info[self.product_info['product_id']==pro_id]['product_ctr'][0]nn result_random = random.uniform(0,1)nn if result_random <= ctr:n return 1 n else:n return 0n # return result_randomnnn def random_bandit(self):n '''n 【隨機】n --------------------------n '''n self.product_info['remark'] = self.product_info['click']/self.product_info['exp']n self.product_info.fillna(0,inplace=True)n self.product_info['weight'] = 0n random_pro_id = random.choice(self.product_id)n random_pro_idx = self.product_info[self.product_info['product_id'] == random_pro_id].indexn self.product_info.loc[random_pro_idx,'weight'] = 1000nnn def naive_bandit(self,max_ratio,now_times):n '''n 【樸素bandit算法】n 隨機選擇第一步n 更新曝光點擊n {更新remark的平均收益n 當前更新步數是否大于max_times最大隨機次數n 是否存在權重為10000的品:n 繼續n 否則n 權重歸0n 選擇收益最大的品,將該品權重設為10000n 否則n 權重歸0n 隨機選擇一個品,該品權重設為1000n 繼續}n --------------------------n max_ratio:隨機次數百分比n now_times:當前試驗次數n '''n self.product_info['remark'] = self.product_info['click']/self.product_info['exp']n self.product_info.fillna(0,inplace=True)n if now_times >= self.times*max_ratio:n if self.product_info['weight'].max() == 10000:n passn else:n self.product_info['weight'] = 0 n naive_pro_idx = random.choice(list(self.product_info[self.product_info['remark']==self.product_info['remark'].max()].index))n self.product_info.loc[naive_pro_idx,'weight'] = 10000n else:n self.product_info['weight'] = 0 n naive_pro_id = random.choice(self.product_id)n naive_pro_idx = self.product_info[self.product_info['product_id'] == naive_pro_id].indexn self.product_info.loc[naive_pro_idx,'weight'] = 1000nn def greedy(self,epsilon):n '''n 【epsilon-greedy算法】n 隨機選擇第一步n 更新曝光點擊n {更新remark的平均收益n 權重歸0n 隨機數(0,1)判斷n 小于隨機數,進行探索,隨機選擇品ID進行實驗,將探索品權重設為1000n 否則n 大于隨機數,進行利用,隨機選擇最大的remark值的品ID,將利用品權重設為1000}n --------------------------n epsilon:隨機探索的概率,1-epsilon為利用的概率n '''n self.product_info['remark'] = self.product_info['click']/self.product_info['exp']n self.product_info.fillna(0,inplace=True)n self.product_info['weight'] = 0 n if random.uniform(0,1) < epsilon:n greed_pro_id = random.choice(self.product_id)n greed_pro_idx = self.product_info[self.product_info['product_id'] == greed_pro_id].indexn self.product_info.loc[greed_pro_idx,'weight'] = 1000n else:n greed_pro_idx = random.choice(list(self.product_info[self.product_info['remark']==self.product_info['remark'].max()].index))n self.product_info.loc[greed_pro_idx,'weight'] = 1000nnn def auto_greedy(self,now_times):n '''n 【epsilon-greedy算法】n 隨機選擇第一步n 更新曝光點擊n {更新remark的平均收益n 權重歸0n 隨機數(0,1)判斷n 小于隨機數,進行探索,隨機選擇品ID進行實驗,將探索品權重設為1000n 否則n 大于隨機數,進行利用,隨機選擇最大的remark值的品ID,將利用品權重設為1000}n --------------------------n now_times:當前嘗試次數n epsilon:隨機探索的概率,1-epsilon為利用的概率,根據1/sqrt(t)更新n '''n epsilon = 1/np.sqrt(now_times+1)n self.product_info['remark'] = self.product_info['click']/self.product_info['exp']n self.product_info.fillna(0,inplace=True)n self.product_info['weight'] = 0 n if random.uniform(0,1) < epsilon:n greed_pro_id = random.choice(self.product_id)n greed_pro_idx = self.product_info[self.product_info['product_id'] == greed_pro_id].indexn self.product_info.loc[greed_pro_idx,'weight'] = 1000n else:n greed_pro_idx = random.choice(list(self.product_info[self.product_info['remark']==self.product_info['remark'].max()].index))n self.product_info.loc[greed_pro_idx,'weight'] = 1000nnn def softmax(self,tow):n '''n 【采用Boltzmann分布進行品采樣】n 隨機選擇第一步n 更新曝光點擊n {更新remark的平均收益n 權重歸0n 計算Boltzmann分布概率n 根據概率選品n 將品權重設為1000}n --------------------------n tow:溫度,值越小,越趨近利用,值越大,越趨近探索n '''n self.product_info['remark'] = self.product_info['click']/self.product_info['exp']n self.product_info.fillna(0,inplace=True)n self.product_info['weight'] = 0 n boltzmann_p = np.array(list(np.exp(self.product_info['remark']/tow)/np.exp(self.product_info['remark']/tow).sum()))n softmax_pro_id = np.random.choice(list(self.product_info['product_id']), p = boltzmann_p.ravel())n softmax_pro_idx = self.product_info[self.product_info['product_id'] == softmax_pro_id].indexn self.product_info.loc[softmax_pro_idx,'weight'] = 1000nnn def ucb(self,c,now_times):n '''n https://zhuanlan.zhihu.com/p/52964567n ucb = ctr_avg + c*sqrt(log(t)/n)n --------------------------n c:為超參,c越小,收斂越快n now_times:當前試驗次數n '''n ucb_reward = self.product_info['click']/self.product_info['exp']n ucb_explore = c*np.sqrt(np.log(now_times+1)/self.product_info['exp'])n ucb_weight = ucb_reward + ucb_exploren self.product_info['weight'] = ucb_weightnnn def ucb1(self):n '''n 【UCB算法】n ucb1 = ctr_avg + sqrt(2*ln(n_total)/n)n --------------------------n '''n ucb_reward = self.product_info['click']/self.product_info['exp']n ucb_explore = np.sqrt(2 * np.log(self.product_info['exp'].sum()))/self.product_info['exp']n ucb_weight = ucb_reward + ucb_exploren self.product_info['weight'] = ucb_weightnnn def thompson_sampling(self):n '''n 【湯普森采樣算法】n np.random.beta(1+success,1+(total-success))n --------------------------n '''n self.product_info['weight'] = np.random.beta(1+self.product_info['click'],1+(self.product_info['exp']-self.product_info['click']))nnn def bayesian_ucb(self,c):n '''n 【基于beta分布的貝葉斯優化UCB】n https://zhuanlan.zhihu.com/p/218398647n --------------------------n c:有多少個標準單位考慮置信上界,越大越趨近探索,越小越趨近利用,值為0即純貪心算法n '''n ucb_reward = self.product_info['click']/self.product_info['exp']n ucb_explore = np.random.beta(1+self.product_info['click'],1+(self.product_info['exp']-self.product_info['click']))n ucb_weight = ucb_reward + c*ucb_exploren self.product_info['weight'] = ucb_weightnnn def gradient_bandit(self,a):n '''n 【梯度提升偏好算法】n https://zhuanlan.zhihu.com/p/44325923n 更新hapn 選品n 更新曝光/點擊/收益n 更新han --------------------------n a:0.25n '''n self.product_info['weight'] = 0n # 更新hapn self.product_info['hap'] = np.e**self.product_info['ha']/(np.e**self.product_info['ha']).sum()n # 選品n gradient_pro_id = np.random.choice(list(self.product_info['product_id']), p = np.array(self.product_info['hap']).ravel())n gradient_pro_idx = self.product_info[self.product_info['product_id'] == gradient_pro_id].indexn self.product_info.loc[gradient_pro_idx,'weight'] = 1 # 選此次要曝光的品n # 更新商品信息表的順序n self.product_info.fillna(1000,inplace=True)n self.product_info.sort_values(by='weight',ascending=False,inplace=True)n self.product_info.reset_index(drop=True,inplace=True)n # 更新曝光n exp_old = self.product_info.loc[0,'exp']n exp_new = exp_old+1n self.product_info.loc[0,'exp'] = exp_newn # 更新點擊n tmp_id = int(self.product_info.loc[0,'product_id'])n click_old = self.product_info.loc[0,'click']n click_increment = self.product_is_click(tmp_id)n click_new = click_old + click_incrementn self.product_info.loc[0,'click'] = click_newn # 更新實時CTRn self.product_info['remark'] = self.product_info['click']/self.product_info['exp'] n self.product_info.fillna(0,inplace=True)n # 計算總體CTRn average_reward = self.product_info['click'].sum()/self.product_info['exp'].sum()n # print(average_reward,'n',self.product_info)n # 更新各個品的preferencen # print(self.product_info['ha'][0],'+',a,'*(',click_increment,'-',average_reward,')*(',self.product_info['weight'][0],'-',self.product_info['hap'][0],')')n self.product_info['ha'] = self.product_info['ha'] + a*(click_increment-average_reward)*(self.product_info['weight']-self.product_info['hap'])n # print('=',self.product_info['ha'][0])nnnn def record(self,indicator):n '''n 記錄收斂情況n grade_exp_prop:最佳商品曝光占比n accumulate_reward_avg:平均累積獎賞n '''n if indicator == 'grade_exp_prop':n grade_id = self.product_info[self.product_info['product_ctr'] == self.product_info['product_ctr'].max()]['product_id'].values[0]n grade_id_exp = self.product_info[self.product_info['product_id'] == grade_id]['exp'].values[0]n total_exp = self.product_info['exp'].sum()n grade_exp_prop = round(grade_id_exp/total_exp,4) n result = grade_exp_propn elif indicator == 'accumulate_reward_avg':n accumulate_reward_avg = self.product_info['click'].sum()/self.product_info['exp'].sum()n result = accumulate_reward_avgn else:n raise ValueError('indicator name error')nn return resultnnn def update(self,method,parameter,i):n '''n 更新數據n '''n # 更新曝光n exp_old = self.product_info.loc[0,'exp']n exp_new = exp_old+1n self.product_info.loc[0,'exp'] = exp_newnn # 更新點擊nn tmp_id = int(self.product_info.loc[0,'product_id'])nn click_old = self.product_info.loc[0,'click']n click_increment = self.product_is_click(tmp_id)n click_new = click_old + click_incrementn self.product_info.loc[0,'click'] = click_newnn if method == 'random_bandit':n self.random_bandit()n elif method == 'naive_bandit':n self.naive_bandit(parameter,i)n elif method == 'greedy':n self.greedy(parameter)n elif method == 'auto_greedy':n self.auto_greedy(i)n elif method == 'softmax':n self.softmax(parameter)n elif method == 'ucb':n self.ucb(parameter,i)n elif method == 'ucb1':n self.ucb1()n elif method == 'thompson_sampling':n self.thompson_sampling()n elif method == 'bayesian_ucb':n self.bayesian_ucb(parameter)n else:n raise ValueError('method error')nn # 更新商品信息表的順序n self.product_info.fillna(1000,inplace=True)n self.product_info.sort_values(by='weight',ascending=False,inplace=True)n self.product_info.reset_index(drop=True,inplace=True)nnn def iteration(self,method,parameter=0):n '''n 更新迭代n '''n grade_exp_prop_lst = []n accumulate_reward_avg_lst = []n # print(self.product_info)n for i in range(self.times):n if method == 'gradient_bandit':n self.gradient_bandit(parameter)n else:n self.update(method,parameter,i)n grade_exp_prop_lst.append(self.record(indicator='grade_exp_prop'))n accumulate_reward_avg_lst.append(self.record(indicator='accumulate_reward_avg'))n print(method)n print(self.product_info)nn return grade_exp_prop_lst,accumulate_reward_avg_lst

執行代碼

第二塊代碼則是執行,輸出每種策略的輸出結果。

if __name__ == '__main__':n random_num = 16n random.seed(random_num)n rl_model1 = RL()n random_exp_prop,random_reward_avg = rl_model1.iteration('random_bandit')n random.seed(random_num)n rl_model2 = RL()n naive_exp_prop,naive_reward_avg = rl_model2.iteration('naive_bandit',parameter=0.3)tn random.seed(random_num)n rl_model3 = RL()n greedy_exp_prop,greedy_reward_avg = rl_model3.iteration('greedy',parameter=0.3)n random.seed(random_num)n rl_model4 = RL()n agreedy_exp_prop,agreedy_reward_avg = rl_model4.iteration('auto_greedy')n random.seed(random_num)n rl_model5 = RL()n softmax_exp_prop,softmax_reward_avg = rl_model5.iteration('softmax',parameter=0.13)n random.seed(random_num)n rl_model6 = RL()n ucb_exp_prop,ucb_reward_avg = rl_model6.iteration('ucb',parameter=0.25)n random.seed(random_num)n rl_model7 = RL()n ucb1_exp_prop,ucb1_reward_avg = rl_model7.iteration('ucb1')n random.seed(random_num)n rl_model8 = RL()n ts_exp_prop,ts_reward_avg = rl_model8.iteration('thompson_sampling')n random.seed(random_num)n rl_model9 = RL()n bayes_exp_prop,bayes_reward_avg = rl_model9.iteration('bayesian_ucb',parameter=3)n random.seed(random_num)n rl_model10 = RL()n gradient_exp_prop,gradient_reward_avg = rl_model10.iteration('gradient_bandit',parameter=0.25)

結果解讀

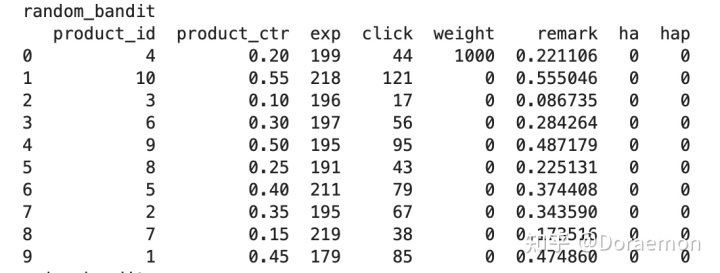

下方為代碼執行結果示例:

product_id為臂ID,product_ctr為真實收益,exp為搖臂的次數,click為獲得收益的次數,remark為當前實時收益。

歡迎關注~一個醫學生(預防醫學)的數據成長之路。。。